Alternative assessment

As the scholarly publishing world adopts altmetrics, the ways in which the data is used are developing fast, reports Rebecca Pool

Altmetrics surfaced in 2010 from a tide of scholarly dissatisfaction. For many, peer review was slow, citation counting insufficient, and the Journal Impact Factor dubious.

Factor in the explosion of data that researchers wanted to access, assess and, if relevant, analyse, and traditional metrics were floundering. Then Altmetric, Plum Analytics and ImpactStory, among others, cast out a lifeline.

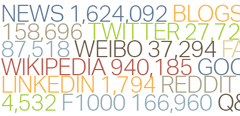

Providing online tools for researchers and institutions to measure article-level metrics via social media data, article views, downloads and more, these organisations delivered a new way for researchers to sift through information while assessing interest in research.

But, over time, the premise of altmetrics has changed. As Euan Adie, chief executive of UK-based Altmetric, highlights: ‘If you read the original 2010 manifesto on altmetrics, discovery and filtering information featured highly. Also, researchers were generally interested in the attention work received.’

However, industry developments such as the rise of open-access publishing have led research funders to demand more information on an article’s actual impact on society, as well as its standing in its own field of research. As a result, altmetrics suppliers are changing tack.

‘We’re now moving away from social media sources, which are more about the attention your work is getting, towards other sources that indicate the impact your work is actually having,’ says Adie.

Such sources could include policy documents, clinical guidelines or reviews in Faculty of 1000, as well as open peer review systems such as Publons and PubPeer. And, as Adie explains: ‘It is much, much harder to get impact-related data... but more funders have a desire to see both attention and impact as well as produce quality research, and altmetrics can measure these factors pretty well.’

Against this backdrop, demand continues for Altmetric Badges, one of Altmetric’s primary products. These visual ‘doughnuts’ provide an instant record of online attention, and in a recent development, the online digital repository Figshare has adopted them for the academic research it hosts.

Meanwhile, Altmetric is updating its web-based Explorer platform to reflect industry developments. Designed to give publishers, institutions and funders more detailed information on online interest, the new version will provide a better user experience and easier analysis.

As part of the drive to focus on impact, Altmetric has added Scopus citation counts to its Explorer package for publishers and institutions. Users can now view Scopus citation counts for any Altmetric-tracked research alongside mentions in policy documents, social networks, Wikipedia and other online media outlets and forums.

For Adie, the addition of citations is now crucial. ‘We’re really trying to push a message that our metrics are complementary to traditional metrics, and one doesn’t replace the other,’ he says. ‘Citations still are a good way of getting a broad indication of the scholarly influence of your work, and answers the important question: are your peers using it? But the point is that our metrics help you get a more rounded picture of attention and impact, and pick up information that citations can’t.’

Andrea Michalek, founder of Plum Analytics, agrees that both traditional and alternative metrics are absolutely necessary. ‘Metrics around research are on a continuum. We have traditional metrics, usage and citation, and newer categories of metrics include social media, mentions and captures,’ she says. ‘By gathering data in each of these categories, we can develop the most complete and compelling stories.’

When Michalek founded Plum Analytics in 2012, she wanted to track global scholarly research output and calculate metrics to reveal how each individual output was ‘received’ online. Like Altmetric, her organisation developed a host of products, called PlumX, to gather different kinds of metrics, including usage, mentions, captures and social media, as well as citations.

Then, in 2014, Plum Analytics was acquired by EBSCO Information Services, and earlier this year a new product, ‘Plum Print’, was added to the EBSCO Discovery Service and EBSCOhost databases. Like the Altmetric Badge, it provides a swift visualisation of the article-level altmetrics gathered for a piece of research.

Crucially, EBSCO users can now use Plum Print to visualise the research impact of myriad items, including articles, book chapters and institutional repository material. And for Michalek, this feeds the growing need to provide data on the impact research has on society: ‘As more and more researchers are exposed to highly visual Plum Print information, I believe they will begin to understand, and then expect, to have more information on the engagement that is taking place around a piece of research. We will move away from a world where impact of a piece of work is largely assessed by the journal that it is published in.’

Books and beyond

But what if your research output isn’t in a journal, and is instead a chapter in a book, or even a book itself? As Martijn Roelandse, manager of publishing innovation at Springer, points out, the world of books has a very different take on metrics.

‘We know how many citations a journal article has in which database and its number of tweets and blog posts, but very little has been known about the impact and reach of a book or chapter,’ he explains.

Altmetric’s Adie agrees: ‘We talk about altmetrics being a complement and that’s absolutely true for journals, but not books. Often citation indexes don’t even exist and there’s literally no data available to show a book is accepted by its peers, was used in a particular way, or was getting any attention.’

Given this, Springer and Altmetric jointly developed Bookmetrix, an online platform that offers title- and chapter-level metrics across all of Springer’s books. Launched last year, the platform reports how often a book or chapter is cited and downloaded, as well as how often it is mentioned, reviewed or shared online, drawing on sources such as Twitter, Facebook, Wikipedia and readership statistics from the Mendeley reference manager.

‘For citations we chose to use data from Crossref,’ says Roelandse. ‘Thomson Reuters and Scopus index only up to 20 per cent of books, as their focus has been on journal articles. But we wanted to build something so I could go back to authors and confidently say, “look, your book has been indexed and cited” and now [with Crossref] we have 100 per cent coverage for all our books.’

And just over a year on, Bookmetrix has been well received. The platform now covers 230,000 books and some 4 million chapters, and more than 1,500 authors have tweeted about their Bookmetrix score. What’s more, Springer is working with the Dutch international scholarly publisher Brill to bring the Bookmetrix platform to its books content.

Roelandse now hopes that researchers can use the new data on books to support their CVs and funding applications and, of course, to gain more credit for the books and chapters they have written.

‘We really want books and chapters to be taken more seriously in research evaluation and university assessments,’ he says. ‘We want to show they are cited and used as much as journal articles and are part of the academic realm that has been neglected by funders and universities.’

Given Bookmetrix’s success, Roelandse is now working on a second phase of development for the platform, which will include new features such as the ability to print a book report and add it to a Research Excellence Framework (REF) submission or grant proposal.

‘Downloading this metrics data into a report is a killer app; we had 10,000 downloads in the first three weeks,’ he says.

For Altmetric, too, its foray into the world of books with Springer has brought new opportunities. Since the launch of Bookmetrix, the company has been building up its coverage of book publisher platforms, supporting ISBNs as unique identifiers for books and collecting mentions of books listed on Google Books.

Then, in April this year, ‘Altmetric Badges for Books’ was launched, with Taylor & Francis’ Routledge Handbooks Online as the first customer. Here, the company’s well-known doughnut-shaped badges are embedded on publisher websites to display a real-time record of mentions of a book or chapter on Twitter, Facebook, Wikipedia, YouTube, government documents, Mendeley and news sites.

According to Peter Harris, digital product manager at Taylor & Francis, the move made absolute sense. ‘As I was talking to more researchers, I found that within our edited collection, chapter authors didn’t feel like they were getting enough merit for their chapters,’ he says. ‘At the same time, many of our authors had been asking a lot about alternative, rather than traditional, metrics.’

A few months on, Harris is thrilled with the results. Pointing to the Routledge International Handbook of Ignorance Studies, he highlights how this publication has, to date, received 10 tweets, 14 Mendeley readers and a mention in The Guardian. ‘We’ve seen the conversations that authors have had about the fact that this has been picked up,’ says Harris. ‘[Badges for Books] is going to have a big impact on how authors promote content, as they’ll realise there is a point to promoting on social media.’

The digital product manager also believes that the success of altmetrics in books is further evidence that the book and journal industries are converging, and he is confident that Taylor & Francis could roll out the software across the rest of its books collection. However, he is well aware that, for books at least, altmetrics is still in its infancy.

‘Alternative metrics are still relatively unknown in the books world and we are right at the beginning of this for books,’ he says. ‘It’s going to be interesting to see how much this will take off.’

But as altmetrics are increasingly adopted across the entire world of scholarly publishing, metrics suppliers are also sounding a note of caution. Adie, for one, is conscious of his responsibility as a provider of altmetrics. To this end, his company recently released the guide ‘What are altmetrics?’ and is funding research into how these metrics are understood as part of the wider scholarly agenda.

As he puts it: ‘We need to educate the market to make sure we’re not over-hyping anything or encouraging any kind of misuse of metrics.’

Likewise, Plum Analytics’ Michalek points out that altmetrics are now more commonplace in the tenure and promotion processes of some universities, in grant applications and in other forms of assessment: ‘It is critical at this juncture to not provide a simplistic all-in-one score that repeats the mistakes of the past with Journal Impact Factor. We must provide more and more indicators and simple tools to allow decision makers the ability to use them.’