A look at prediction markets

13 November 2019

Jacqueline Thompson, Marcus Munafò and Ian Penton-Voak introduce a tool to help assess research quality

Assessing the quality of research is difficult. Jisc and the University of Bristol are partnering to develop a tool that may help institutions improve this process.

To attract government funding for their crucial research, UK universities rely heavily on good ratings in the Research Excellence Framework (REF) – a process of expert review designed to assess the quality of research outputs. REF scores determine how much government research funding each university receives. For instance, research that is world-leading in terms of originality, significance and rigour will be scored higher than research that is recognised only nationally.

Universities spend considerable time trying to work out which research outputs will be rated highest (4*) on quality and impact. The recognised ‘gold standard’ for this process is close reading by a few internal academics, but this is time-consuming and onerous, and it reflects the relatively limited perspective of just a few people.

It’s far better to draw on the insights of more people – which is where prediction markets come in. This online crowdsourcing mechanism has been gaining ground in the assessment of academic research; for example, it has been remarkably accurate at predicting which social science experiments will replicate, and how various chemistry departments would rank in the REF.

How prediction markets work

Prediction markets capture the ‘wisdom of crowds’ by asking large numbers of people to bet on the outcomes of future events – in this case, how a research output will be rated in the next REF assessment. The mechanism works a bit like the stock market, except that, instead of buying and selling shares in companies, participants buy and sell virtual shares online that pay out if a particular event occurs – for instance, if a paper receives a REF rating of 3* or above.

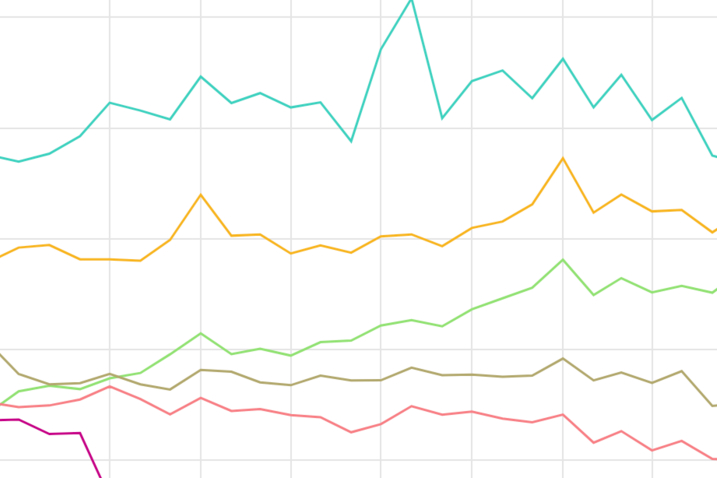
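To see why a share’s price can be read as the crowd’s confidence, here is a minimal sketch of a single binary contract. It assumes, purely for illustration, that one share pays 1 point if the paper is rated 3* or above and 0 points otherwise; the function names and the 1-point payout are hypothetical, not details of the Jisc tool.

```python
# Minimal sketch of a binary "3* or above" contract.
# Assumption (hypothetical): one share pays 1 point if the paper meets the
# threshold and 0 points otherwise.

def share_payout(actual_rating: int, threshold: int = 3) -> float:
    """Payout of one share once the paper's REF star rating is known."""
    return 1.0 if actual_rating >= threshold else 0.0


def trader_profit(shares: float, price_paid: float, actual_rating: int,
                  threshold: int = 3) -> float:
    """Points gained (or lost) by a trader who bought `shares` at `price_paid` each."""
    return shares * (share_payout(actual_rating, threshold) - price_paid)


# A trader buys 8 shares at 0.75 points each.
print(trader_profit(8, 0.75, actual_rating=4))  # paper rated 4*: gains 2.0 points
print(trader_profit(8, 0.75, actual_rating=2))  # paper rated 2*: loses 6.0 points
```

Buying at 0.75 only pays off on average for someone who believes the paper’s chance of reaching 3* is higher than 75%, which is why the price at which trading settles can be read as the group’s probability estimate.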

Markets usually run over a few days or weeks, during which participants can update their bets and compete to earn points by buying low and selling high. After the market closes, the final output is a list of ‘market prices’, one for each paper. A paper’s market price represents the group’s collective confidence that the paper will reach a given rating threshold, such as 3* or above.
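The article does not say how prices are set during trading. Many prediction-market platforms use an automated market maker, and one common choice is Hanson’s logarithmic market scoring rule (LMSR). The sketch below assumes an LMSR market for a single paper with two outcomes (“meets the threshold” or not); the class name and the `liquidity` parameter are hypothetical. It shows how buying shares pushes the price up and how the points cost of each trade is computed.

```python
import math


class LMSRMarket:
    """Binary market for one paper, assuming an LMSR automated market maker.

    `liquidity` (often written b) is a hypothetical tuning parameter:
    larger values make the price less sensitive to any single trade.
    """

    def __init__(self, liquidity: float = 100.0):
        self.b = liquidity
        self.q_yes = 0.0  # outstanding "meets threshold" shares
        self.q_no = 0.0   # outstanding "does not meet threshold" shares

    def _cost(self) -> float:
        # C(q) = b * ln(exp(q_yes/b) + exp(q_no/b)), computed with the
        # log-sum-exp trick to avoid overflow for large share counts.
        a, c = self.q_yes / self.b, self.q_no / self.b
        m = max(a, c)
        return self.b * (m + math.log(math.exp(a - m) + math.exp(c - m)))

    def price_yes(self) -> float:
        # Current price of a "yes" share: a logistic function of the
        # difference in outstanding shares, always between 0 and 1.
        d = (self.q_yes - self.q_no) / self.b
        if d >= 0:
            return 1.0 / (1.0 + math.exp(-d))
        z = math.exp(d)
        return z / (1.0 + z)

    def buy(self, outcome: str, shares: float) -> float:
        """Buy `shares` (sell if negative); return the points cost of the trade."""
        if outcome not in ("yes", "no"):
            raise ValueError("outcome must be 'yes' or 'no'")
        before = self._cost()
        if outcome == "yes":
            self.q_yes += shares
        else:
            self.q_no += shares
        return self._cost() - before


market = LMSRMarket(liquidity=100.0)
print(market.price_yes())       # a fresh market starts at 0.5
cost = market.buy("yes", 50)    # about 28.1 points
print(cost, market.price_yes()) # price rises to about 0.62
```

Because each extra share costs more as the price rises, a trader who buys while the price is low and sells after others have pushed it higher ends up with more points, which is the “buy low, sell high” incentive described above.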

Benefits over other assessment methods

Prediction markets have several advantages over other assessment methods. Crucially, because each paper ends up with its own fine-grained market price, papers can be ranked against each other. And, unlike most other assessment methods, such as surveys or close-reading panels, prediction markets have a built-in mechanism for weighting participants’ confidence in their own ratings: participants can choose to bet (or not bet) on whichever papers they like, and they see real-time information on the group’s overall confidence in each paper, which they can use to inform their bets.
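Since every paper finishes with its own price between 0 and 1, ranking them amounts to a sort. A minimal sketch follows; the paper IDs and prices are hypothetical illustrations, not pilot data.

```python
def rank_papers(final_prices: dict[str, float]) -> list[tuple[str, float]]:
    """Order papers from highest to lowest final market price.

    Ties are broken by paper ID so the ranking is deterministic.
    """
    return sorted(final_prices.items(), key=lambda item: (-item[1], item[0]))


# Hypothetical closing prices for three papers.
prices = {"paper_A": 0.55, "paper_B": 0.81, "paper_C": 0.72}
for paper_id, price in rank_papers(prices):
    print(paper_id, price)
```

Two papers that a panel might place in the same star band can still be separated by their closing prices, which is what makes the ranking finer-grained than star ratings alone.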

Our Jisc pilot project

Over the past six months, Jisc ran three pilot markets at the University of Bristol, in the psychology, biology and chemistry departments. The pilots showed promising results: the outcomes (market prices) from all three correlated strongly with the ratings given by the internal REF panel.

The psychology market was also compared against a machine learning algorithm trained on various metrics; the algorithm’s results correlated at similar levels with both the prediction market and the internal REF panel. These correlations suggest that all of these methods are picking up relevant information, but that the underlying information each reflects is somewhat different.
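The article does not state which correlation statistic the pilots used. Because REF ratings are ordinal star levels, Spearman’s rank correlation is one natural option; the sketch below computes it from scratch, giving tied values the average of their ranks. The function names and example inputs are illustrative assumptions, not the pilots’ analysis code or data.

```python
import math


def average_ranks(values: list[float]) -> list[float]:
    """Rank values 1..n in ascending order; tied values share their mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j across a run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # convert 0-based positions to 1-based ranks
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def pearson(x: list[float], y: list[float]) -> float:
    """Pearson correlation coefficient of two equal-length lists."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length lists with at least 2 items")
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / math.sqrt(var_x * var_y)


def spearman(x: list[float], y: list[float]) -> float:
    """Spearman's rho: Pearson's r computed on tie-averaged ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical market prices and panel star ratings for four papers.
market_prices = [0.3, 0.6, 0.5, 0.9]
panel_stars = [2, 3, 3, 4]
print(spearman(market_prices, panel_stars))  # about 0.95
```

If SciPy is available, `scipy.stats.spearmanr` computes the same statistic. A coefficient near 1 means two methods order papers in nearly the same way; values that are high but clearly below 1 leave room for each method to capture somewhat different information.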

Judging by our discussions with the REF co-ordinators in these University of Bristol departments, we envisage that prediction markets will not replace the traditional close-reading approach, but will instead be most useful as an extra source of information for uncertain or borderline cases.

We also gathered feedback from participants on their experience of taking part in the markets. After all, these are busy academics who are often deluged with requests to fill out surveys or help with assessment exercises. We were pleased to find that, overall, participants reported feeling engaged with the process and enjoying it – one even reported playing the prediction market for fun, instead of checking football scores!

Future directions

We are expanding our series of pilots beyond Bristol to explore how the prediction market tool works in different types of institutions and departments. We still have capacity to include more institutions in the pilots, so do contact us if your institution would like to take part. Once the pilots are complete, we plan to publish results from the full set of studies in a paper with our collaborators at the University of Innsbruck and the Stockholm School of Economics.

In the next year, we also aim to develop a more specialised, user-friendly interface for the prediction market tool through Jisc, based on participants’ feedback. Ultimately, we hope the tool may be useful for other areas of research assessment outside the REF – after all, the REF isn’t the only context where research quality is difficult to assess! We’re betting that in all sorts of areas, the old adage may prove correct: two heads are better than one.

Jacqueline Thompson is a research associate at the University of Bristol; Marcus Munafò is a professor of biological psychology in Bristol’s School of Psychological Science; Ian Penton-Voak is a professor of evolutionary psychology at the University of Bristol.